Building Video Agents

Learn how to create video agents that autonomously generate video content by combining AI models, web search, and the Video Jungle API.

Example Output

Here's an example of a video generated by this agent workflow:

Example video generated by the Nathan Fielder Agent workflow

Introduction

Agents are autonomous programs that can perform complex tasks by combining multiple tools and APIs. In this guide, we'll build an agent that:

- Searches the web for current topics

- Generates voiceover narration

- Downloads relevant video clips

- Creates edited videos with synchronized audio

This example demonstrates building a Nathan Fielder content generator, but the patterns can be adapted for any video generation workflow.

Prerequisites

Before building agents, ensure you have:

- API Keys:
  - Video Jungle API key (`VJ_API_KEY`)
  - Serper API key for web search (`SERPER_API_KEY`)
  - Anthropic API key for AI models (`ANTHROPIC_API_KEY`)
  - Gemini API key for the search agent's `GeminiModel` (`GEMINI_API_KEY`)

- Python Packages:

I recommend using uv to manage dependencies. First make sure uv is installed, then:

```bash
uv init
uv add pydantic-ai videojungle instructor anthropic logfire click
```

`uv init` creates a new project. `uv add` then installs the packages into the project's virtual environment and records them in `pyproject.toml`.

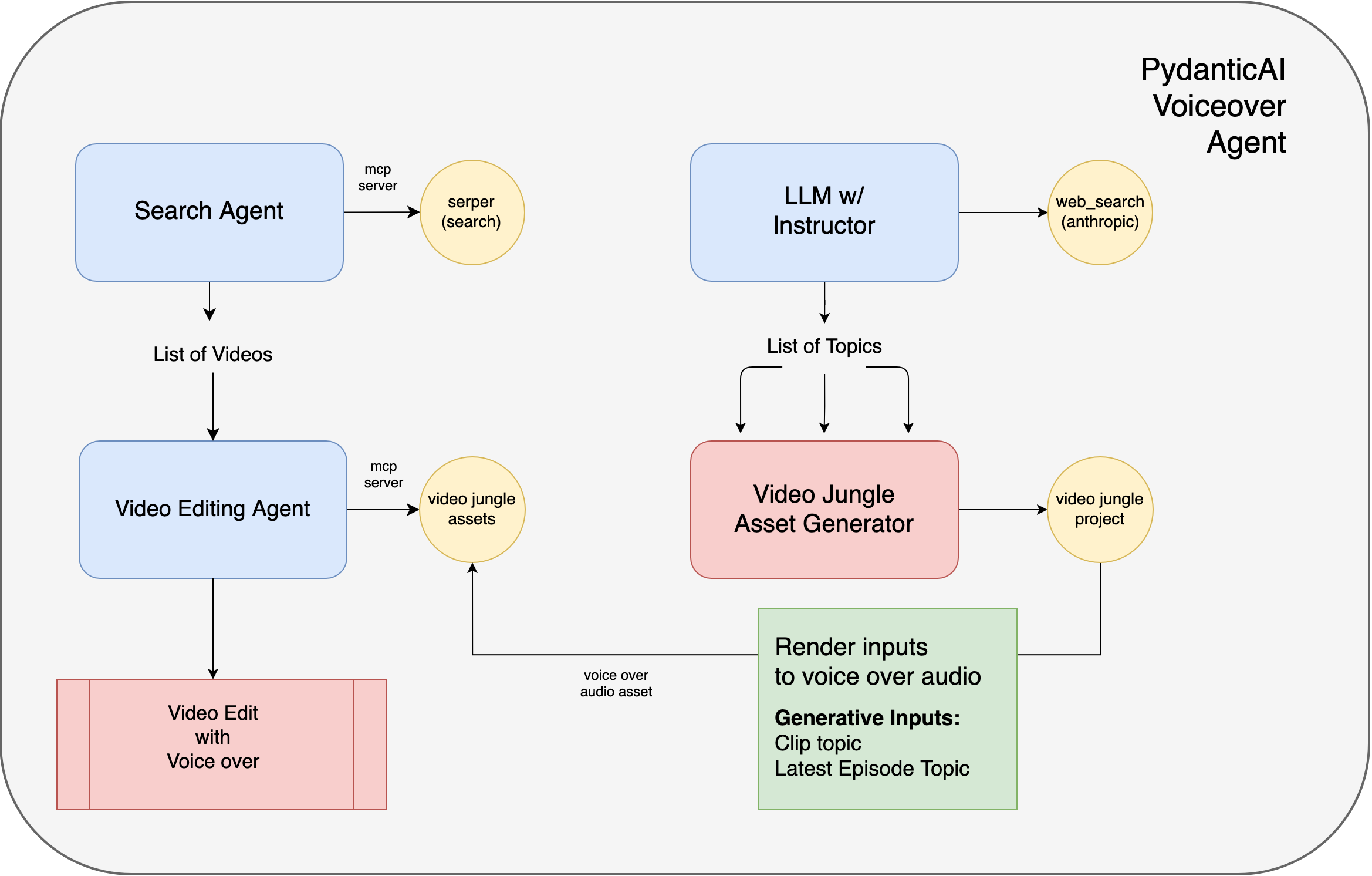

Agent Architecture

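Our agent system consists of two specialized agents that work together. A search agent finds source videos on the web. An edit agent assembles those videos and a generated voiceover into an edit.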

The architecture uses Pydantic models to ensure structured outputs from our agents, making the data flow predictable and type-safe.
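The guide never shows how VideoList, VideoEdit, or ClipParameters are defined. Below is a hypothetical reconstruction in Pydantic v2.

The field names are inferred only from how later code reads the results (`video.url`, `video.title`, `result.output.edit_id`, `resp.clip_topics`, and so on), so the real models may differ. Field descriptions are included because they become part of the JSON schema the model sees.

```python
from pydantic import BaseModel, Field


class Video(BaseModel):
    """One source video found by the search agent (hypothetical fields)."""
    title: str = Field(description="Human-readable title, also used as the upload name")
    url: str = Field(description="URL the video can be downloaded from")


class VideoList(BaseModel):
    """Search agent output, read as result.output.videos."""
    videos: list[Video]


class VideoEdit(BaseModel):
    """Edit agent output, read as result.output.edit_id / result.output.project_id."""
    edit_id: str
    project_id: str


class ClipParameters(BaseModel):
    """Instructor response model, read as resp.clip_topics / resp.latest_episode_topic."""
    clip_topics: list[str] = Field(min_length=1, description="Candidate topics; one is picked at random")
    latest_episode_topic: str


# Validation turns raw JSON into typed objects (or raises ValidationError).
raw = '{"videos": [{"title": "Clip A", "url": "https://example.com/a.mp4"}]}'
parsed = VideoList.model_validate_json(raw)
print(parsed.videos[0].title)  # Clip A
```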

Setting Up MCP Servers

Model Context Protocol (MCP) servers provide tools that agents can use. We'll set up two servers:

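```python
import os

from pydantic_ai.mcp import MCPServerStdio

# Read credentials from the environment so they never appear in source code
vj_api_key = os.environ["VJ_API_KEY"]
serper_api_key = os.environ["SERPER_API_KEY"]

# Video Editor MCP Server - provides video editing tools
vj_server = MCPServerStdio(
    'uvx',
    args=[
        '-p', '3.11',
        '--from', 'video_editor_mcp@0.1.36',
        'video-editor-mcp'
    ],
    env={
        'VJ_API_KEY': vj_api_key,
    },
    timeout=30
)

# Serper MCP Server - provides web search capabilities
serper_server = MCPServerStdio(
    'uvx',
    args=[
        '-p', '3.11',
        'serper-mcp-server@latest',
    ],
    env={
        'SERPER_API_KEY': serper_api_key,
    },
    timeout=30
)
```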

MCP servers run as separate processes and communicate with agents over standard input and output. Each API key is passed only in its own server's environment. The agent can therefore call the server's tools without the credential ever appearing in the prompt or in the model's context.
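Under the hood, the stdio transport exchanges JSON-RPC 2.0 messages, one per line, over the server process's stdin and stdout. `MCPServerStdio` handles all of this for you; the sketch below only illustrates the framing.

It omits the `initialize` handshake that a real MCP session starts with. `encode_message` and `decode_messages` are hypothetical helpers, not part of pydantic-ai.

```python
import json


def encode_message(message: dict) -> bytes:
    """Serialize one JSON-RPC message as a single newline-terminated line."""
    # json.dumps without indent escapes newlines inside strings, so the
    # encoded message never contains a raw newline of its own.
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def decode_messages(stream: bytes) -> list[dict]:
    """Split a chunk of server output back into individual JSON-RPC messages."""
    return [json.loads(line) for line in stream.decode("utf-8").splitlines() if line.strip()]


# Ask a server which tools it offers
request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
wire = encode_message(request)
print(wire)  # b'{"jsonrpc":"2.0","id":1,"method":"tools/list"}\n'
```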

Creating the Search Agent

The search agent specializes in finding video content from the web:

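```python
from pydantic_ai import Agent
from pydantic_ai.models.gemini import GeminiModel
from pydantic_ai.usage import UsageLimits

# VideoList, vj_server, and serper_server are defined in the earlier sections

# Use a cheaper model for search tasks
cheap_model = GeminiModel("gemini-2.5-flash-preview-05-20")

search_agent = Agent(
    model=cheap_model,
    instructions='You are an expert video sourcer. You find the best source videos for a given topic.',
    mcp_servers=[vj_server, serper_server],
    output_type=VideoList,
    instrument=True,
)

# Using the search agent (async with / await must run inside an async function)
async def find_clips():
    async with search_agent.run_mcp_servers():
        result = await search_agent.run(
            "Find recent Nathan Fielder clips from 'The Rehearsal'",
            usage_limits=UsageLimits(request_limit=5)
        )
    return result.output.videos  # Structured list of videos
```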

The search agent pairs web search (through the MCP servers) with a structured `VideoList` output. Downstream code can therefore iterate over `result.output.videos` instead of parsing free-form text.
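Even with structured output, a model can return the same clip twice under slightly different URLs. Below is a minimal sketch of order-preserving deduplication.

`SourceVideo` is a hypothetical stand-in for whatever item type VideoList contains. Treating URLs as identical when they differ only in host letter case or `#fragment` is an assumption; tighten or relax it to suit your sources.

```python
from dataclasses import dataclass
from urllib.parse import urlsplit, urlunsplit


@dataclass(frozen=True)
class SourceVideo:
    """Hypothetical stand-in for one item of VideoList.videos."""
    title: str
    url: str


def normalize_url(url: str) -> str:
    """Lowercase the scheme and host and drop the #fragment so trivial variants compare equal."""
    parts = urlsplit(url.strip())
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), parts.path, parts.query, ""))


def unique_videos(videos: list[SourceVideo]) -> list[SourceVideo]:
    """Keep the first occurrence of each normalized URL, preserving the agent's ranking."""
    seen: set[str] = set()
    unique: list[SourceVideo] = []
    for video in videos:
        key = normalize_url(video.url)
        if key not in seen:
            seen.add(key)
            unique.append(video)
    return unique


candidates = [
    SourceVideo("Clip A", "https://Example.com/a#t=10"),
    SourceVideo("Clip A (dup)", "https://example.com/a"),
    SourceVideo("Clip B", "https://example.com/b"),
]
print([v.title for v in unique_videos(candidates)])  # ['Clip A', 'Clip B']
```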

Creating the Edit Agent

The edit agent handles the complex task of creating video edits:

```python
from pydantic_ai import Agent
from pydantic_ai.models.anthropic import AnthropicModel

# VideoEdit and vj_server are defined in the earlier sections

# Use a more capable model for complex editing tasks
good_model = AnthropicModel("claude-sonnet-4-20250514")

edit_agent = Agent(
    model=good_model,
    # Adjacent string literals are concatenated, so each piece ends with a space
    instructions=(
        'You are an expert video editor, creating fast paced, interesting video edits for social media. '
        'You can answer questions, download and analyze videos, and create rough video edits using a mix of project assets and remote videos. '
        'By default, if a project id is provided, you will use ONLY the assets in that project to create the edit. If no project id is provided, '
        'you will create a new project, and search videofiles to create an edit instead. For video assets in a project, you will use the type "user" instead of "videofile". '
        'If you are doing a voice over, you will use the audio asset in the project as the voiceover for the edit, and set the video asset\'s audio level to 0 so that the voiceover is the only audio in the edit.'
    ),
    mcp_servers=[vj_server],
    output_type=VideoEdit,
    instrument=True,
)
```

The edit agent's instructions spell out three things: how to set audio levels under a voiceover, which asset type to use for project assets, and when to create a new project. This way the agent does not have to guess at these details.
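Instructions alone do not guarantee the agent follows them, so it can help to check the rules mechanically. The sketch below encodes two of the rules above: project assets use type "user", and video audio is muted under a voiceover.

It checks them against a hypothetical edit description. The dictionary keys (`voiceover_asset_id`, `clips`, `asset_id`, `asset_type`, `volume`) are illustrative assumptions, not the Video Jungle edit schema.

```python
from typing import Any


def check_voiceover_rules(edit: dict[str, Any], project_asset_ids: set[str]) -> list[str]:
    """Return rule violations for a hypothetical edit description (empty list = OK)."""
    problems: list[str] = []
    has_voiceover = edit.get("voiceover_asset_id") is not None
    for i, clip in enumerate(edit.get("clips", [])):
        # Rule: assets that live in the project are referenced with type "user".
        if clip["asset_id"] in project_asset_ids and clip["asset_type"] != "user":
            problems.append(f"clip {i}: project asset should use type 'user'")
        # Rule: with a voiceover, every video clip's own audio is muted.
        if has_voiceover and clip.get("volume", 1.0) != 0:
            problems.append(f"clip {i}: volume must be 0 under a voiceover")
    return problems


edit = {
    "voiceover_asset_id": "audio-1",
    "clips": [
        {"asset_id": "vid-1", "asset_type": "user", "volume": 0},
        {"asset_id": "vid-2", "asset_type": "videofile", "volume": 1.0},
    ],
}
print(check_voiceover_rules(edit, project_asset_ids={"vid-1", "vid-2"}))
```

An empty list means the edit passes both checks; a non-empty one could be fed back to the agent as a follow-up prompt.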

Generating Audio Content

Before creating video edits, we generate contextual audio narration:

```python
import random

import instructor
from anthropic import Anthropic

# Assumes `vj` is an initialized Video Jungle client (from the videojungle package)
# and `ClipParameters` is the Pydantic model used as Instructor's response model.

def search_and_render_audio():
    # Use Instructor for structured prompt generation
    workflow_client = Anthropic()
    client = instructor.from_anthropic(workflow_client)

    # Search for current topics
    search_prompt = """
    I'm trying to come up with an interesting spoken dialogue prompt about nathan fielder's the rehearsal season 2.
    can you help me come up with ideas for what might be interesting? you can search the web to get up to date info.
    """

    # Get structured topic suggestions
    resp = client.messages.create(
        model="claude-sonnet-4-20250514",
        max_tokens=4096,
        messages=[{"role": "user", "content": search_prompt}],
        tools=[{
            "type": "web_search_20250305",
            "name": "web_search",
            "max_uses": 5
        }],
        response_model=ClipParameters,
    )

    # Create a prompt template for voice generation
    prompt = vj.prompts.generate(
        task="You are a 'The Rehearsal' episode analyzer, diving deep into meta idea to discuss. You aim for 30 second long read script concept that is funny and insightful.",
        parameters=["clip topic", "latest episode topic"]
    )

    # Create project and generate audio
    project = vj.projects.create(
        name="Nathan Fielder Clips",
        description="Clips from Nathan Fielder episodes",
        prompt_id=prompt.id,
        generation_method="prompt-to-speech"
    )

    # Generate the actual audio asset from the project's first script,
    # picking one of the suggested clip topics at random
    audio = vj.projects.generate(
        script_id=project.scripts[0].id,
        project_id=project.id,
        parameters={
            "clip topic": random.choice(resp.clip_topics),
            "latest episode topic": resp.latest_episode_topic
        }
    )

    return (project.id, audio['asset_id'])
```

This workflow demonstrates how to:

- Use AI to research current topics

- Create parameterized prompts for content generation

- Generate audio assets that will guide the video edit
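The guide passes parameter values to `vj.projects.generate` rather than building the script text itself. To make the parameterized-prompt idea concrete, here is a local, hypothetical sketch of the same pattern.

It uses a template with named `{parameter}` slots, filled from ClipParameters-style fields with one clip topic picked at random. `fill_prompt`, the template text, and the sample topics are illustrative placeholders, not the Video Jungle implementation.

```python
import random
import re

# Matches {name} slots; names may contain spaces, like "clip topic"
PLACEHOLDER = re.compile(r"\{([^{}]+)\}")


def fill_prompt(template: str, parameters: dict[str, str]) -> str:
    """Substitute {name} slots, rejecting missing or unexpected parameters."""
    expected = set(PLACEHOLDER.findall(template))
    provided = set(parameters)
    if expected != provided:
        raise ValueError(
            f"missing: {sorted(expected - provided)}, unexpected: {sorted(provided - expected)}"
        )
    return PLACEHOLDER.sub(lambda m: parameters[m.group(1)], template)


template = "Write a funny, insightful 30-second script about {clip topic}, tied to {latest episode topic}."
clip_topics = ["topic A", "topic B", "topic C"]  # e.g. resp.clip_topics
prompt_text = fill_prompt(template, {
    "clip topic": random.choice(clip_topics),
    "latest episode topic": "episode topic X",  # e.g. resp.latest_episode_topic
})
print(prompt_text)
```

Failing loudly on a mismatch catches a renamed parameter before it silently produces a script with an unfilled slot.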

Complete Workflow

The main workflow orchestrates all components:

```python
import os
import time
from typing import Optional

from pydantic_ai.usage import UsageLimits

# Assumes vj, search_agent, edit_agent, and search_and_render_audio are defined as
# in the previous sections, and that download(url, output_path=...) is a video
# download helper available in your environment.

async def async_main(project_id: Optional[str] = None, asset_id: Optional[str] = None):
    if project_id:
        # Use existing project; its audio asset must be supplied as well
        if not asset_id:
            raise ValueError("An audio asset ID is required when reusing a project")
        project = vj.projects.get(project_id)
        audio_asset_id = asset_id
    else:
        # Create new project with audio
        project_id, audio_asset_id = search_and_render_audio()
        project = vj.projects.get(project_id)

    # Search and download videos
    successful_videos = 0
    processed_urls = set()
    async with search_agent.run_mcp_servers():
        result = await search_agent.run(
            "Find recent Nathan Fielder clips",
            usage_limits=UsageLimits(request_limit=5)
        )

    # Download and upload videos to project (at most 5, skipping duplicate URLs)
    for video in result.output.videos:
        if video.url not in processed_urls and successful_videos < 5:
            processed_urls.add(video.url)
            safe_title = video.title.replace('/', '-')
            output_filename = f"{safe_title}.mp4"
            try:
                # Download video
                download(video.url, output_path=output_filename)
                # Upload to project
                project.upload_asset(
                    name=video.title,
                    description=f"Agent downloaded: {video.title}",
                    filename=output_filename
                )
                successful_videos += 1
            except Exception as e:
                print(f"Error processing {video.title}: {e}")
            finally:
                # Clean up the local file even if the upload failed
                if os.path.exists(output_filename):
                    os.remove(output_filename)

    # Wait for video analysis (blocks the event loop, which is harmless here
    # because nothing else is running concurrently)
    time.sleep(45)

    # Create the final edit
    async with edit_agent.run_mcp_servers():
        asset = vj.assets.get(audio_asset_id)
        asset_length = asset.create_parameters['metadata']['duration_seconds']
        result = await edit_agent.run(
            f"""Create an edit using all video assets in project '{project.id}'.
Use audio asset '{audio_asset_id}' as voiceover (0 to {asset_length} seconds).
Set all video audio levels to 0. Show outdoor scenes first.
Total video duration must match voiceover duration ({asset_length} seconds).
Create the edit but don't render the final video.""",
            usage_limits=UsageLimits(request_limit=14)
        )

    print(f"Created edit: {result.output.edit_id} in project: {result.output.project_id}")
```

The workflow handles:

- Creating or reusing projects

- Downloading and managing video assets

- Synchronizing video edits with audio duration

- Error handling: a failed download or upload is logged and skipped rather than aborting the run
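The workflow's `video.title.replace('/', '-')` only handles slashes. Titles containing characters such as `:`, `?`, or `"` still produce filenames that Windows rejects.

Here is a stricter, hypothetical `safe_filename` helper. The replaced character set and the 100-character cap are assumptions; adjust them for your platform. Identical titles still map to the same filename, so collisions need separate handling.

```python
import re
import unicodedata


def safe_filename(title: str, suffix: str = ".mp4", max_length: int = 100) -> str:
    """Turn an arbitrary video title into a portable filename."""
    # Fold compatibility characters (e.g. full-width letters) into plain forms.
    name = unicodedata.normalize("NFKC", title)
    # Replace path separators, characters Windows forbids, and control characters.
    name = re.sub(r'[<>:"/\\|?*\x00-\x1f]', "-", name)
    # Collapse runs of whitespace; Windows also rejects trailing dots and spaces.
    name = re.sub(r"\s+", " ", name).strip(" .")
    # Truncate, re-trim, and fall back to a fixed stem if nothing usable is left.
    return (name[:max_length].rstrip(" .") or "video") + suffix


print(safe_filename("Clip: part 1/2?"))  # Clip- part 1-2-.mp4
```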

Running the Agent

The agent can be run from the command line:

```python
import asyncio
from typing import Optional

import click

# async_main is defined in the previous section

@click.command()
@click.option('--project-id', '-p', help='Existing project ID to use')
@click.option('--asset-id', '-a', help='Audio asset ID for the edit')
def main(project_id: Optional[str] = None, asset_id: Optional[str] = None):
    asyncio.run(async_main(project_id, asset_id))

if __name__ == "__main__":
    main()
```

Best Practices

When building agents with Video Jungle:

1. Error Handling

- Always wrap download and upload operations in try-except blocks

- Keep track of processed URLs to avoid duplicates

- Implement retry logic for network operations
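The workflow above logs failures but does not retry them. A minimal retry helper with exponential backoff might look like the following.

`with_retries` is hypothetical. Retrying only on `OSError` (which includes `ConnectionError` and `TimeoutError`) is an assumption, since the exceptions your download and upload calls raise depend on the libraries underneath.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(
    fn: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 1.0,
    retry_on: tuple[type[BaseException], ...] = (OSError,),
) -> T:
    """Call fn, retrying on retry_on exceptions with exponential backoff and jitter."""
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except retry_on:
            if attempt == attempts:
                raise  # out of attempts: surface the last error
            # Delays of base_delay, 2*base_delay, 4*base_delay, ... plus up to 10% jitter
            delay = base_delay * 2 ** (attempt - 1)
            time.sleep(delay + random.uniform(0, delay * 0.1))
    raise AssertionError("unreachable")


# Usage sketch with the workflow's download helper:
# with_retries(lambda: download(video.url, output_path=output_filename))
```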

2. Resource Management

- Clean up downloaded files after uploading

- Give MCP servers a startup timeout long enough for uvx to fetch and launch them (the examples use 30 seconds)

- Set usage limits to control API costs
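One way to guarantee cleanup, even when an upload fails, is to download into a temporary directory that Python deletes on exit. `scratch_path` is a hypothetical helper built on the standard library's `tempfile.TemporaryDirectory`.

```python
import os
import tempfile
from contextlib import contextmanager
from typing import Iterator


@contextmanager
def scratch_path(filename: str) -> Iterator[str]:
    """Yield a path inside a fresh temporary directory.

    The directory and everything written into it are deleted when the
    with-block exits, including when it exits because of an exception.
    """
    with tempfile.TemporaryDirectory() as tmp_dir:
        yield os.path.join(tmp_dir, filename)


# Usage sketch (download and project.upload_asset come from the workflow above;
# pass a sanitized title as the filename):
# with scratch_path("clip.mp4") as path:
#     download(video.url, output_path=path)
#     project.upload_asset(name=video.title, description="...", filename=path)
```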

3. Agent Design

- Use specialized agents for different tasks (search vs. edit)

- Choose appropriate models based on task complexity

- Provide detailed instructions to guide agent behavior

4. Audio-Video Synchronization

- Always check audio duration before creating edits

- Set video audio levels to 0 when using voiceovers

- Ensure total edit duration matches audio length
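Matching the edit's length to the voiceover is simple arithmetic, but rounding can make per-clip durations drift from the total. Below is a hypothetical `split_duration` helper.

It divides a voiceover evenly across clips in whole hundredths of a second, so the segments always add back up to the rounded total.

```python
def split_duration(total_seconds: float, clip_count: int, precision: int = 2) -> list[float]:
    """Split total_seconds into clip_count near-equal segments.

    Works in integer units of 10**-precision seconds so the segments sum
    exactly to the total once it has been rounded to that precision.
    """
    if clip_count <= 0:
        raise ValueError("clip_count must be positive")
    scale = 10 ** precision
    total_units = round(total_seconds * scale)
    base, remainder = divmod(total_units, clip_count)
    # The first `remainder` clips get one extra unit, so no time is lost to rounding.
    units = [base + (1 if i < remainder else 0) for i in range(clip_count)]
    return [u / scale for u in units]


print(split_duration(10, 3))  # [3.34, 3.33, 3.33]
```

For example, a 31.2-second voiceover split across 5 clips gives 6.24 seconds per clip.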

5. Monitoring

- Use logfire for observability

- Instrument agents to track performance

- Log progress for long-running operations

Remember to handle API rate limits and add delays between operations where needed. The time.sleep(45) in the example gives Video Jungle time to finish analyzing the uploaded videos before the edit agent works with them.
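A fixed delay can be too short for long uploads and wasteful for short ones. Polling with a deadline is a common alternative. `wait_until` below is a hypothetical helper.

The readiness check you pass it is left to you: for example, asking Video Jungle whether each uploaded asset has finished analysis, an API this guide does not show. Inside `async_main`, an `asyncio.sleep`-based variant would avoid blocking the event loop.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 120.0, interval: float = 5.0) -> bool:
    """Poll condition() until it returns True or timeout seconds elapse.

    Returns True as soon as the condition holds, False if the deadline passes first.
    """
    deadline = time.monotonic() + timeout  # monotonic clock is immune to wall-clock changes
    while True:
        if condition():
            return True
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            return False
        # Never sleep past the deadline
        time.sleep(min(interval, remaining))
```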

By following these patterns, you can build sophisticated agents that automate complex video generation workflows while maintaining quality and reliability.